Current Research

Collaborative visual exploration of sentiment in tweets

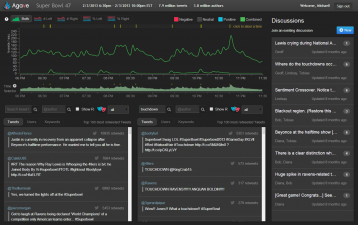

Social media platforms are an increasingly important source of data for social science research about individuals and groups. However, the size and complexity of social media data sets challenge traditional research methods. With other researchers in the Scientific Collaboration and Creativity Lab, I designed, developed, and evaluated Agave, a tool enabling a group of researchers to explore and analyze a large set of tweets.

Using Agave, researchers can compare and contrast multiple searches and filters, and get an overview of changes in tweet rate over time with details about the top tweets. Agave also shows the breakdown of tweets by sentiment (positive, neutral, or negative) and supports collaboration through annotations and discussion posts that are linked to the data. Social interactions in Agave made it easier for researchers to "jump in" to explore an unfamiliar data set. Our main findings were published in a paper at CDVE 2014.

Affect detection in chat messages

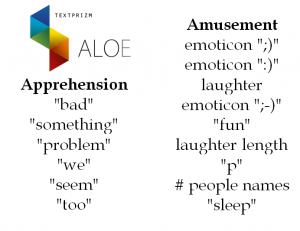

Affect and emotion are important in group communications and cooperative work, but the role of affect in computer-supported cooperative work is rarely studied. Moreover, analysis of emotion beyond a superficial level typically requires painstaking human interpretation, which is expensive and slow.

I have worked with Cecilia Aragon's research group on emotion in text communication to develop machine learning tools that identify many kinds of affect and emotion in large text communication data sets, such as logs of chat conversations. We are especially interested in how message context and textual features of chat messages can be leveraged for affect classification. A paper explaining our process and findings has been accepted at CSCW 2013, and we have open-sourced ALOE, an implementation of the techniques we have developed.

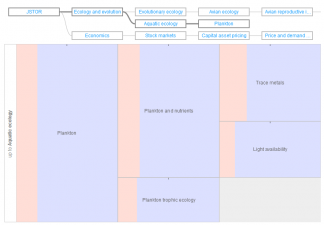

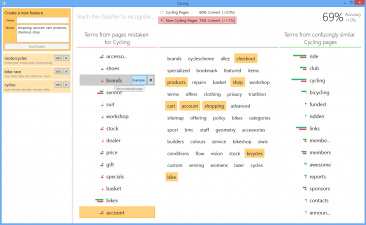

Visual support for building text classification features

In machine learning, features are how the machine represents and understands the data. In general, features are thought to be one of the most important factors in building a successful machine learning system. However, there is very little support available to help with thinking of good features. In this internship project at Microsoft Research with Saleema Amershi, Bongshin Lee, Steven Drucker, Ashish Kapoor, and Patrice Simard, we explored an approach to feature ideation based on visual summaries of sets of misclassified data.

We designed and built a tool, FeatureInsight, which helps the user explore web pages misclassified by a text classifier and interactively build new features out of sets of related words. We ran an experiment to evaluate the effects of two general strategies for feature ideation implemented in FeatureInsight, finding that visual summaries were helpful for building better classifiers.
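As a rough illustration of what a feature built from a set of related words can look like, here is a minimal sketch. It is not FeatureInsight's implementation: the function name `dictionary_feature`, the tokenizer, and the example word set are all hypothetical, chosen only to show the general idea of a word-set feature.

```python
import re

def dictionary_feature(text, words):
    """Count how many tokens in `text` belong to the word set `words`.

    Hypothetical helper for illustration; a real system may weight,
    normalize, or binarize this count differently.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    vocab = {w.lower() for w in words}
    return sum(1 for t in tokens if t in vocab)

# Example word set a user might assemble after noticing that
# cooking pages are being misclassified (made-up data).
cooking_words = {"recipe", "bake", "oven", "ingredients"}
page = "Preheat the oven, then mix the ingredients for this recipe."

print(dictionary_feature(page, cooking_words))  # 3
```

The resulting count can be passed to a classifier as one extra input alongside its existing features.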

Past Research

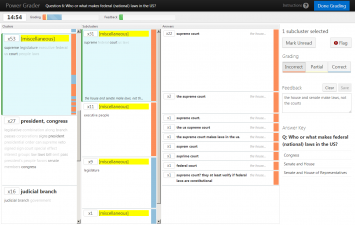

Clustered short-answer grading for MOOCs

In massive online courses, assessment is a major challenge, and many such courses rely on multiple-choice questions because they are easy to grade. However, open-ended forms of assessment, like short answers or essays, have greater value to students and teachers. In this internship project at Microsoft Research with Sumit Basu, Lucy Vanderwende, and Chuck Jacobs, we developed a cluster-based interface for reading, grading, and giving feedback on large groups of similar answers at once.

In an online evaluation with 25 teachers, we compared our cluster-based prototype to an unclustered baseline, and found that the clustered interface allowed teachers to grade faster, give more feedback, and develop a high-level view of students' misunderstandings. We published our research at ACM Learning at Scale 2014.
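To give a sense of how grouping similar answers lets a grader act on many responses at once, here is a deliberately simple sketch. It is not the clustering method used in the project: it groups answers that share the same set of content words, and the stopword list, function names, and sample answers are all assumptions made for illustration.

```python
import re
from collections import defaultdict

# Tiny, hypothetical stopword list for the example.
STOPWORDS = {"a", "an", "the", "is", "it", "of", "to"}

def answer_key(answer):
    """Reduce an answer to its set of lowercase content words."""
    tokens = re.findall(r"[a-z0-9]+", answer.lower())
    return frozenset(t for t in tokens if t not in STOPWORDS)

def cluster_answers(answers):
    """Group answers whose content-word sets are identical."""
    clusters = defaultdict(list)
    for answer in answers:
        clusters[answer_key(answer)].append(answer)
    return list(clusters.values())

# Made-up student answers to a single short-answer question.
answers = [
    "The mitochondria.",
    "mitochondria",
    "It is the mitochondria",
    "the nucleus",
]
groups = cluster_answers(answers)
for group in groups:
    print(len(group), group)
```

With this grouping, a grade or comment applied to one group covers every answer in it, which is the interaction the clustered interface is built around.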

Hoptree visual hierarchy navigation system

Do you ever forget where you are in your file system, or while using a complicated multi-page website? Hierarchical information structures are ubiquitous and important in business, research, and everyday life.

Hoptrees are a visual navigation interface I developed in collaboration with Jevin West, Carl Bergstrom, and Cecilia Aragon. Hoptrees make it easier for people to keep track of where they are and where they’ve been when navigating hierarchical information. For example, they could help you avoid getting lost in your file system, or on a complex hierarchical website. Check out our demo and source code!

Design and evaluation of an eye tracking biometric system

Most people use passwords many times each day, despite severe usability problems. It is hard to remember strong passwords, and writing down or re-using passwords compromises security. Given how many passwords people need these days, finding alternative security mechanisms is a priority.

Advised by Cecilia Aragon, I led the user-centered design, development, and evaluation of a novel biometric authentication technology that uses an eye tracker to identify individuals based on unique features of their eye movements. I used an iterative design process involving paper prototypes and working prototypes that use a Tobii eye tracker to record gaze data. I ran an evaluation to understand the system's usability relative to a standard PIN authentication system. We published our results at ICB 2013.

Qualitative study of communication in language classrooms

The potential benefits of computational tools for people learning other languages have been studied extensively over the past 50 years. However, in most language classrooms, technology adoption is minimal. Additionally, many new technologies like tablet computers have not been as extensively studied by researchers in the field of Computer Assisted Language Learning.

As part of my Qualitative Research Methods course, two other students and I observed meetings of an introductory Russian class and interviewed several of the students. We analyzed the observational and interview data, constructing a grounded theory of artifact use and student interactions in introductory language classes. Because students in the class very often practiced scripted dialogs out of the textbook, we believe that there is potential for new mobile technologies to support more dynamic, natural language practice in the classroom. We published our findings at CHI 2013 in Paris, with honorable mention for best paper.

A real-time visualization tool for musicians

Playing music is an intense cognitive and expressive task. It involves reading and interpreting music notation, planning the production of music, controlling the instrument (or voice) to produce the desired sound, and listening closely to help correct problems as they occur. This can make playing music frustrating, especially for beginning musicians. As a result, many musicians struggle with staying motivated, and many eventually quit.

As a long-time piano and flute student, I believe that music visualization tools could make practicing more fun, rewarding, and efficient, especially for beginners. For my undergraduate thesis project at Oberlin, I researched audio-processing techniques and created several working prototype visualizations. Taking advantage of the readily available musical talent at Oberlin, I collected feedback on the visualizations. The feedback was enthusiastic, but highlighted the need for further work and perhaps a participatory design process. More information is available on the project website.